My AI gave me fake data. Here’s how to catch it if it happens to you.

Or, How to Build an AI Hallucination Detector

I was reading through the Stanford 2025 AI Index when the section on hallucinations reminded me of an experience I had earlier this year when I asked ChatGPT to analyze quarterly sales data for a startup client. It reported 15% growth in Europe. The only problem was that the dataset had no European records, as my client doesn’t operate in Europe.

While the 1.3% hallucination rate the Stanford Index reports seems low, a single hallucination in financial forecasting can cost millions. In fact, this is such a pressing issue that the Big 4 accounting firms are quietly hiring AI Validation Specialists at salaries of $150k+.

Below, I’ve recreated the system that caught the $2M forecasting error for my client. It uses sample data to show how even a small AI hallucination (such as a 40% holiday overprediction) can signal a bigger risk.

Layer 1: Mathematical validation with Python

Catching calculation errors and fabricated metrics

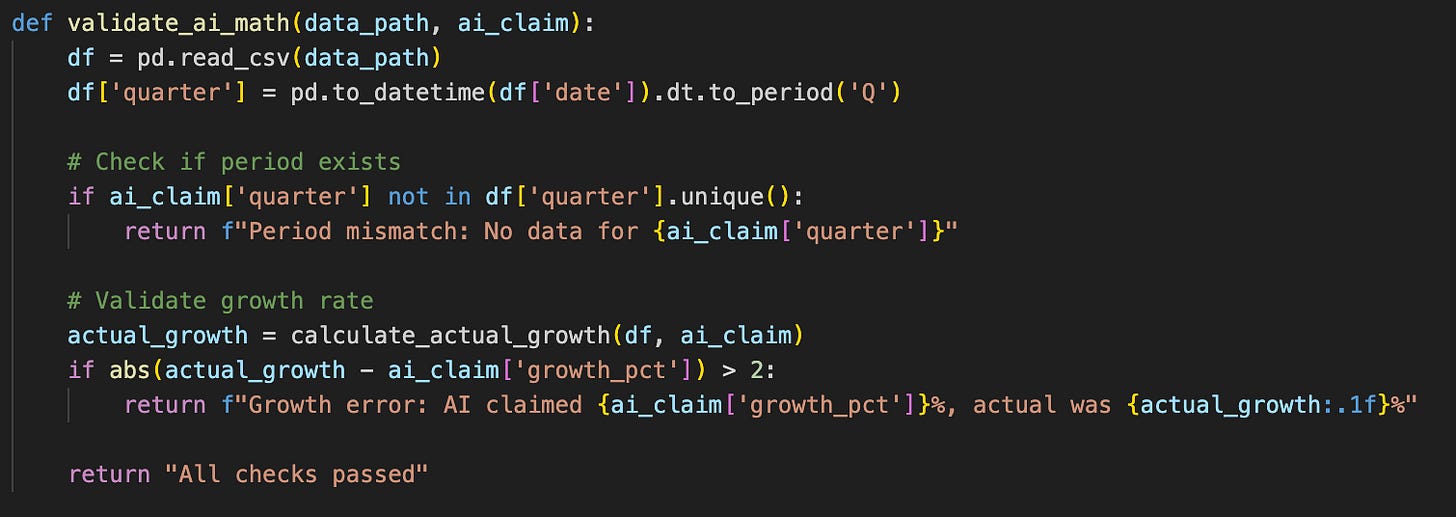

The foundation of this validation system is verifying the basic math behind AI-generated claims. Using Python, you can load your dataset and recompute each reported figure from the raw records.

I wrote this validation to catch three issues:

Incorrect growth calculations where the AI misrepresents trends

Period mismatches where comparisons don’t align properly

Fabricated metrics that don’t match the underlying calculation

The system flags any discrepancies beyond a 2% tolerance threshold, ensuring minor differences don’t create false alarms while catching significant errors.
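As a rough illustration of the checks above, here is a minimal sketch in plain Python. The function names, the row layout (`region`, `period`, `revenue`), and the sample figures are all hypothetical, and the 2% tolerance is interpreted here as two percentage points, which is an assumption rather than something the original system confirms.

```python
def growth_pct(previous, current):
    """Period-over-period growth as a percentage."""
    if previous == 0:
        raise ValueError("previous-period revenue is zero, so growth is undefined")
    return (current - previous) * 100.0 / previous


def check_growth_claim(rows, region, prev_period, curr_period,
                       claimed_pct, tolerance=2.0):
    """Recompute growth from raw rows and compare it with the AI's claim.

    tolerance is in percentage points (an assumption for this sketch).
    """
    totals = {prev_period: 0.0, curr_period: 0.0}
    seen = set()
    for row in rows:
        if row["region"] == region and row["period"] in totals:
            totals[row["period"]] += row["revenue"]
            seen.add(row["period"])

    # Missing records for either period: the claim has no data behind it
    # (covers both period mismatches and fabricated metrics).
    if seen != {prev_period, curr_period}:
        return {"status": "NO_DATA", "actual_pct": None}

    actual = growth_pct(totals[prev_period], totals[curr_period])
    status = "OK" if abs(actual - claimed_pct) <= tolerance else "MISMATCH"
    return {"status": status, "actual_pct": actual}


# Made-up sample rows, not the client's data.
sample_rows = [
    {"region": "North America", "period": "2024-Q1", "revenue": 100_000.0},
    {"region": "North America", "period": "2024-Q2", "revenue": 110_000.0},
]

europe = check_growth_claim(sample_rows, "Europe", "2024-Q1", "2024-Q2", 15.0)
inflated = check_growth_claim(sample_rows, "North America", "2024-Q1", "2024-Q2", 15.0)
close = check_growth_claim(sample_rows, "North America", "2024-Q1", "2024-Q2", 11.0)
```

With this sample data, the Europe claim comes back as `NO_DATA`, the 15% claim for North America is a `MISMATCH` against the recomputed growth, and the 11% claim passes because it sits inside the tolerance.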

Layer 2: Verifying Your Sources

Identifying invented dimensions and segments

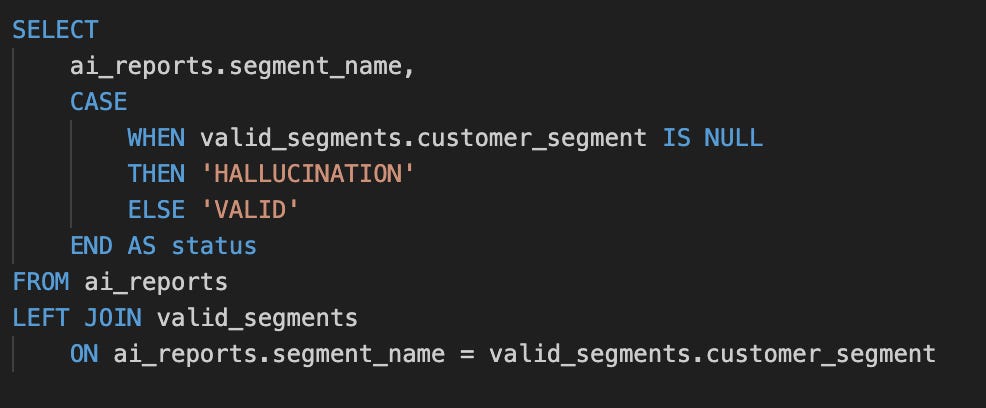

When AI tools hallucinate customer segments, product categories, or geographic regions, the most direct check is a SQL query that verifies whether they exist in the source data.

How does this work in practice?

Any category marked ‘HALLUCINATION’ doesn’t exist in our sales data

The revenue threshold filters out insignificant segments

The query structure adapts easily to verify regions, customer types, or other dimensions
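One way such a query could look is sketched below, run through Python's built-in `sqlite3` module so it is self-contained. The table names (`sales`, `ai_claimed`), column names, category values, and the revenue threshold are assumptions made for illustration; the original query may be structured differently.

```python
import sqlite3

# Claimed categories are LEFT JOINed to actual revenue totals.
# No match in sales -> 'HALLUCINATION'. Real categories below the
# revenue threshold are filtered out of the report as insignificant.
VERIFY_SQL = """
SELECT c.category,
       COALESCE(s.total_revenue, 0) AS total_revenue,
       CASE WHEN s.category IS NULL THEN 'HALLUCINATION'
            ELSE 'VERIFIED' END AS status
FROM ai_claimed AS c
LEFT JOIN (
    SELECT category, SUM(revenue) AS total_revenue
    FROM sales
    GROUP BY category
) AS s ON s.category = c.category
WHERE s.category IS NULL OR s.total_revenue >= ?
ORDER BY c.category
"""


def verify_categories(conn, claimed, min_revenue):
    """Return (category, total_revenue, status) for each AI-claimed category."""
    conn.execute("CREATE TEMP TABLE IF NOT EXISTS ai_claimed (category TEXT)")
    conn.execute("DELETE FROM ai_claimed")
    conn.executemany(
        "INSERT INTO ai_claimed VALUES (?)",
        [(name,) for name in sorted(set(claimed))],
    )
    return conn.execute(VERIFY_SQL, (min_revenue,)).fetchall()


# Made-up sample data in an in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (category TEXT, revenue REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("Electronics", 50_000.0), ("Clothing", 30_000.0), ("Stickers", 50.0)],
)

report = verify_categories(
    conn, ["Electronics", "Smart Home", "Stickers"], min_revenue=1_000
)
```

To check regions or customer types instead, the same pattern should work by swapping the `category` column for the dimension in question.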

Layer 3: Contextual Auditing

Aligning insights with business reality

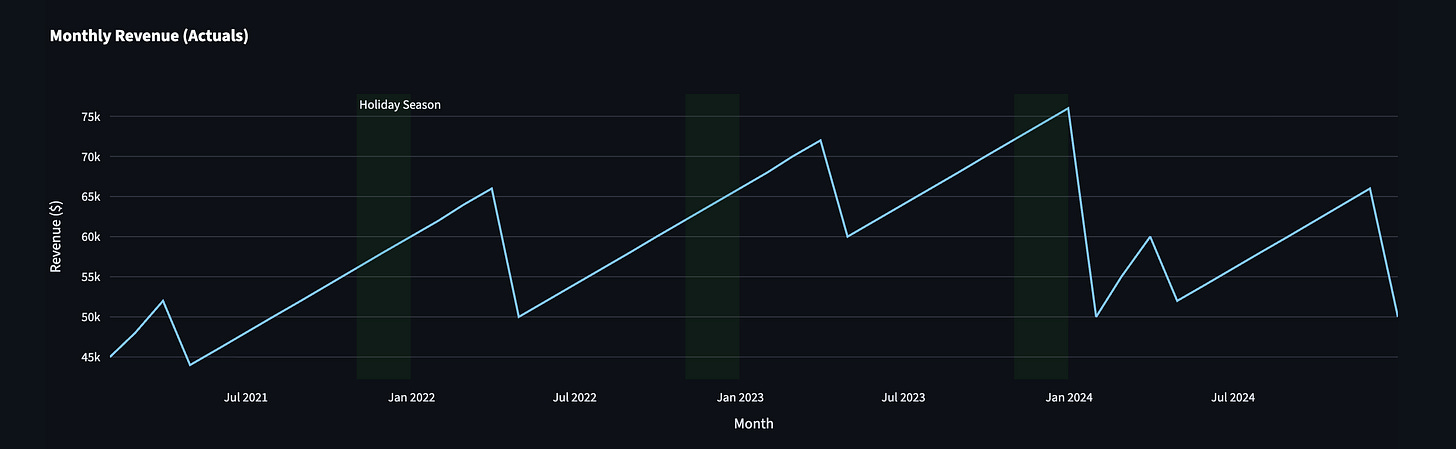

Of course, we wouldn’t be efficient data professionals if we didn’t give our non-technical leadership a way to monitor our progress.

This layer provides business context through visual validation. Our dashboard displays actual revenue trends with historical context, enabling leaders to spot anomalies that automated checks might miss.

The dashboard includes:

Revenue Trend Analysis: Actual performance plotted over time with holiday season highlights

Segment Validation Table: Direct comparison of AI-reported segments against actual business data

Pattern Recognition: Visual cues that help identify when AI insights deviate from historical business cycles

While automated checks catch obvious errors, this visual layer helps humans spot subtler issues that require business context.
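The numbers behind a visual cue like this can be quite simple. The sketch below compares an AI forecast for the holiday season with the average of past holiday seasons and flags large deviations. The function name, the 20% flag threshold, and the revenue figures are hypothetical; they are chosen only so that the example reproduces a 40% overprediction.

```python
from statistics import mean


def holiday_deviation(historical_holiday_revenue, ai_forecast,
                      max_deviation_pct=20.0):
    """Compare an AI holiday forecast with the historical holiday average.

    max_deviation_pct is an illustrative threshold, not a recommended value.
    """
    baseline = mean(historical_holiday_revenue)
    if baseline == 0:
        raise ValueError("historical baseline is zero, so deviation is undefined")
    deviation_pct = (ai_forecast - baseline) * 100.0 / baseline
    return {
        "baseline": baseline,
        "deviation_pct": deviation_pct,
        "flag": abs(deviation_pct) > max_deviation_pct,
    }


# Made-up figures: three past holiday seasons and one AI forecast.
past_seasons = [190_000, 200_000, 210_000]
result = holiday_deviation(past_seasons, ai_forecast=280_000)
```

A flagged result is a prompt for a human to look at the chart, not proof of a hallucination; a real promotion or product launch could justify the same jump.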

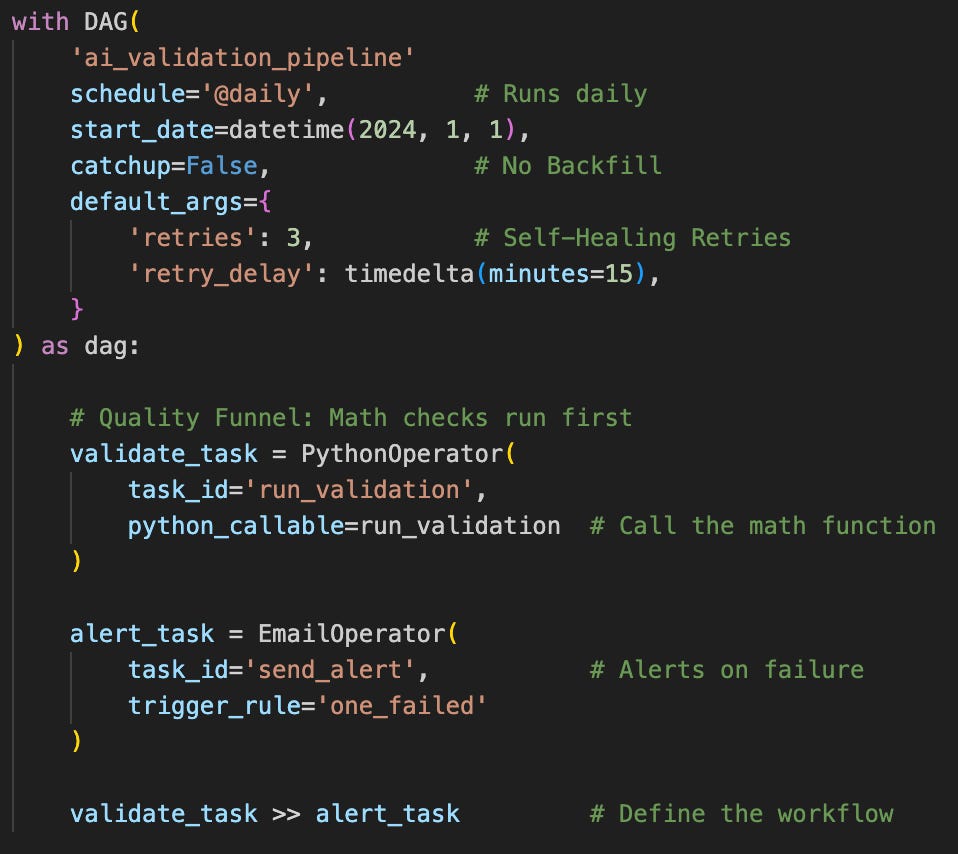

Layer 4: Automated Validation Pipeline

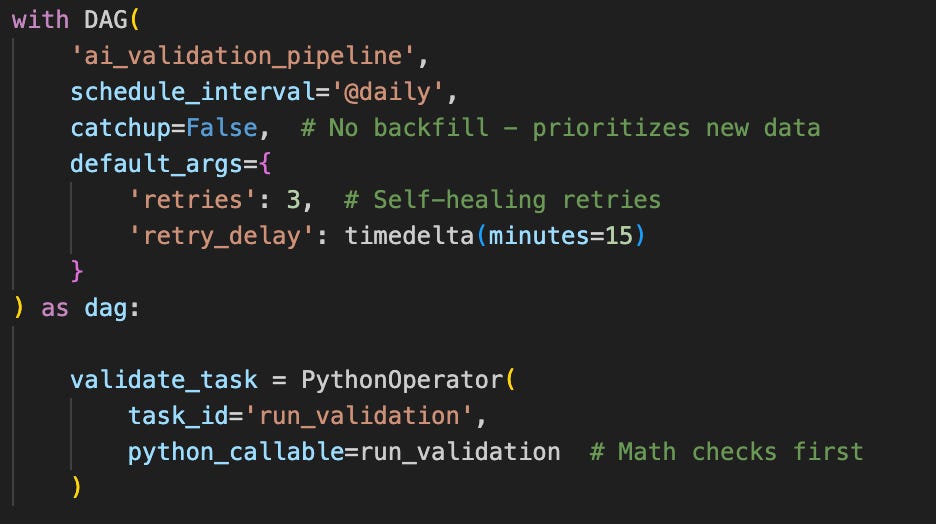

This Airflow workflow runs daily validation without manual intervention. I included three safeguards to ensure operational reliability:

No Backfill: “catchup=False” prioritizes new data, avoiding resource-heavy historical reruns

Self-Healing Retries: 3 automatic retries (15-minute delays) handle temporary glitches like database timeouts

Quality Funnel: Math checks run before source verification, just as analysts validate calculations before digging deeper.

Failed checks trigger email alerts, while clean reports flow to our validation dashboard for leadership review.

The pipeline executes with three reliability layers:
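The sketch below is not the Airflow DAG itself. It is a plain-Python stand-in that mimics the retry and ordering behavior so it can run without Airflow installed. The two dictionaries show the settings as they would typically be passed to an Airflow `DAG(...)`; the schedule value, email address, task names, and helper functions are assumptions.

```python
from datetime import timedelta

# Settings described above, in the shape Airflow usually takes them.
DAG_KWARGS = {
    "schedule": "@daily",   # assumed; older Airflow versions use schedule_interval
    "catchup": False,       # no backfill of historical runs
}
DEFAULT_ARGS = {
    "retries": 3,
    "retry_delay": timedelta(minutes=15),
    "email_on_failure": True,
    "email": ["data-team@example.com"],  # hypothetical address
}


def run_with_retries(task, retries):
    """Call task(); on an exception, retry up to `retries` more times."""
    for attempt in range(retries + 1):
        try:
            return task()
        except Exception:
            if attempt == retries:
                raise
            # A real scheduler would wait retry_delay before the next attempt.


def run_funnel(steps, retries):
    """Run (name, task) steps in order and stop at the first failure.

    Returns (names_that_passed, name_that_failed_or_None).
    """
    passed = []
    for name, task in steps:
        try:
            run_with_retries(task, retries)
        except Exception:
            return passed, name  # this is where an email alert would fire
        passed.append(name)
    return passed, None
```

In an actual DAG, the funnel ordering would normally be declared as a task dependency (math checks upstream of source verification) rather than coded as a loop.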

It is worth remembering that AI models work mainly by pattern recognition, not by checking their claims against your data. In business, even a 1.3% hallucination rate is too high when real money is involved.

What we’ve built today:

A detection system (Python + SQL) that catches AI’s creative math

A business context layer that surfaces where AI ignores historical trends or operational realities

A safety net (Airflow) that runs silently until it’s needed

Try this yourself and see what your model confidently gets wrong.

The future isn’t humans or AI; it’s humans governing AI.

Update: The complete validation system is now available as open source code on GitHub. You can run the detection system locally and see the exact business dashboard we built.

Hodman Murad
