The Machine Learning Reality Gap

A Practical Maturity Assessment

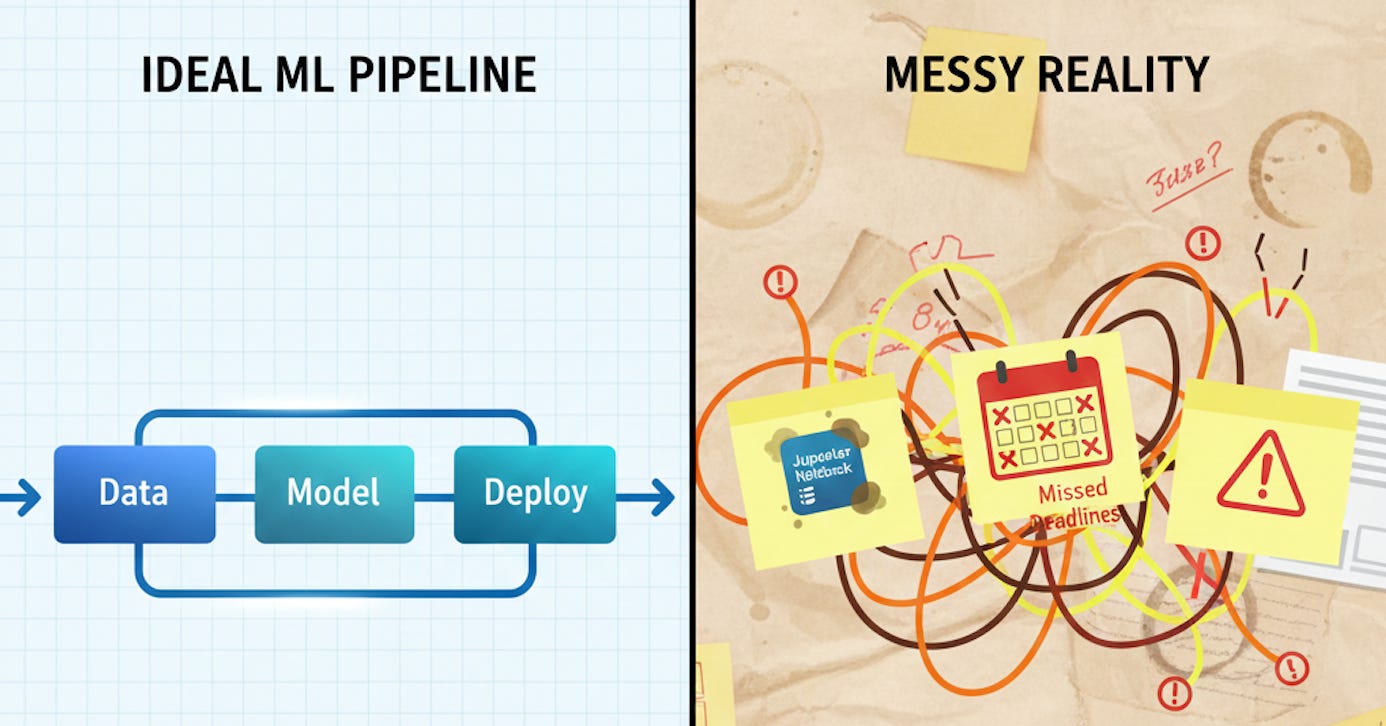

Ask ten machine learning teams about their MLOps maturity, and nine will overestimate by at least one full stage. The gap isn’t intentional dishonesty. Without a clear assessment framework, teams conflate their aspirations with their current capabilities.

This mismatch between perception and reality is one of the most persistent problems in the machine learning industry. Organizations buy enterprise platforms before establishing basic version control. Meanwhile, data scientists wrestle with deployment processes that DevOps engineers wouldn’t tolerate for a weekend hobby project.

Teams have plenty of ambition and intelligence. What they lack is clear knowledge of where they actually stand. Without an honest assessment, you can’t build a roadmap. Without a roadmap, you accumulate technical debt at scale. And technical debt in production ML systems can mean degraded predictions, compliance failures, and models that stop working without triggering any alarms.

This article provides a practical framework for assessing your team’s true MLOps maturity and a clear path forward, no matter where you are today.

Why Maturity Matters

The consequences of MLOps immaturity are measurable and painful. Teams operating at low maturity levels experience:

Deployment delays of weeks or months. When every model deployment requires custom engineering work, your data scientists become bottlenecked waiting for production access. Projects that should take days stretch into quarters.

Resource waste at scale. Without understanding which models are actually valuable, teams maintain dozens of stale models in production that consume compute resources but deliver no business value. You’re paying cloud bills to serve predictions that no one uses or trusts anymore, but no one has the confidence to turn them off.

Cross-team bottlenecks. Data scientists wait weeks for infrastructure teams to provision resources. Engineers wait days for data scientists to explain model requirements. Every model deployment requires synchronizing three calendars and a video call to clarify what should have been documented. Velocity dies in the handoff gaps.

Security and compliance exposure. Ad-hoc processes mean ad-hoc security. Models access data they shouldn’t, PII leaks into training artifacts, and audit trails don’t exist. In regulated industries, this can be existential.

Conversely, organizations that systematically improve their MLOps maturity see concrete benefits:

Deployment velocity increases by 10x or more. Teams that once shipped quarterly begin shipping weekly. The path from experiment to production becomes predictable and fast.

Model quality improves through iteration. When deployment is easy, experimentation becomes practical. Teams run more A/B tests, iterate faster, and compound improvements over time.

Incidents decrease and resolve faster. Systematic monitoring catches problems early. Automated rollback procedures contain damage. Mean time to resolution drops from days to minutes.

Team satisfaction improves. Data scientists spend more time on modeling and less time wrestling with infrastructure. Engineers spend less time on heroic firefighting. Everyone can focus on the work they were hired to do.
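Claims like "quarterly to weekly" or "days to minutes" are only useful if you measure them. Here is a minimal sketch of how a team might track two of the numbers mentioned above, deployment frequency and mean time to resolution. The `Incident` record and both function names are hypothetical, invented for illustration; real timestamps would come from whatever deployment log and incident tracker you already have.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Incident:
    # Hypothetical record: when an incident was opened and when it was resolved.
    opened: datetime
    resolved: datetime


def deployments_per_week(deploy_times: list[datetime]) -> float:
    """Average deployments per week between the first and last deployment."""
    if len(deploy_times) < 2:
        return float(len(deploy_times))
    span = max(deploy_times) - min(deploy_times)
    # Treat any span shorter than one week as a single week to avoid inflated rates.
    weeks = max(span / timedelta(weeks=1), 1.0)
    return len(deploy_times) / weeks


def mean_time_to_resolution(incidents: list[Incident]) -> timedelta:
    """Average time between an incident being opened and resolved."""
    if not incidents:
        return timedelta(0)
    seconds = mean((i.resolved - i.opened).total_seconds() for i in incidents)
    return timedelta(seconds=seconds)
```

Tracking these two numbers over a few months gives you a baseline to compare against after each process change, which is more reliable than a team's impression of how fast it ships.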

The path from immaturity to maturity is not about buying the most expensive MLOps platform. It’s about systematically building capabilities in the right order.
