Trapped in the Export Loop

So, you’re a human data pipeline.

You were hired to analyze data. What you actually do is move it. You click export, rename the file, drop it into a shared folder, and email the link. Then you do it again tomorrow. And the day after. At that point, the job title and the actual job have fully parted ways.

This is the analyst-as-middleware problem, and it’s more common than most organizations admit. Someone with Python skills and SQL fluency spends their days downloading CSVs and managing attachment threads. No queries. No transformations. No insight generation. Just scheduled, manual data transport dressed up with an analytical job title.

Why Organizations Create Data Dead Ends

This doesn’t happen because companies are malicious. It happens because systems calcify. IT locks down database access for security reasons that made sense in 2011 and never got revisited. Legacy platforms don’t expose APIs or connect cleanly to modern tooling. Business units developed their own manual workflows years ago, and ‘how we’ve always done it’ carries more institutional weight than efficiency ever could.

The result is an informal architecture built from browser downloads, shared drives, and email attachments. Staying sharp inside it is still possible without system access. What it requires is intentional practice with the materials you already have.

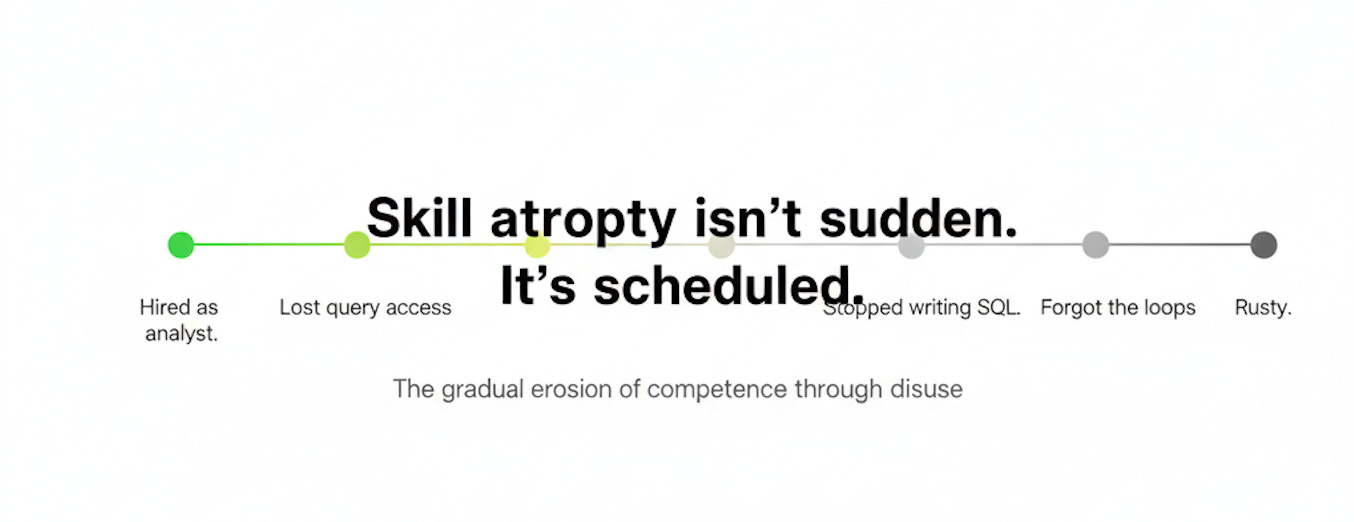

How Skill Atrophy Happens to Good Analysts

The cognitive cost isn’t obvious at first. You’re still technically working with data, but there’s a meaningful difference between analyzing data and transporting it.

When your job doesn’t require you to write queries, you stop writing them. Python loops you once wrote from memory start to require documentation lookups. SQL syntax blurs at the edges. The joins you knew cold become joins you have to think about. Skills erode in proportion to how rarely they’re exercised, and manual export workflows exercise almost none of the skills that define analytical competence.

Six months in, you’re slower. A year in, you’re rusty. Two years in, you may start to believe the rusty version is the real one.

If your daily work has drifted away from the technical side, the previous issue is worth your time. LLM Fine-Tuning on a Budget walks through dataset preparation, how LoRA works, and how to evaluate a model when you don’t have labeled test data. It’s structured, technical, and built for people who want to stay capable.

Red Flags That Confirm You’re Trapped in Manual Export Work

Watch for these patterns in your own workflow. Your browser history is dominated by downloads, report portals, and file transfers. Shared drives function as your team’s data architecture, with folders named by date and maintained by convention rather than design. Email is literally your ETL pipeline: attachments move data between people because systems won’t talk to each other. You haven’t written a query against a live database in months. Your ‘analysis’ begins after someone else pulls the data and ends before anyone asks how it was prepared.

If more than two of those describe your week, you’re not doing data analysis. You’re doing data custody.

How to Preserve Your Skills Without Changing Your Job

Getting out of this situation runs through your relationship with the data you already handle, not through a job search.

Local sandboxing means running every export you receive through a script before you manually touch it. Parse it with Python. Load it into a local database. Write the query that answers the question, even if you’re just going to paste the answer into a report anyway. The analysis muscle stays active because you’re actually using it.
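As a minimal sketch of that loop, the snippet below loads a CSV export into a local SQLite database using only the Python standard library, then answers a question with a hand-written query. The `load_export` helper, the `orders` table, and the sample columns are all hypothetical stand-ins for whatever lands in your inbox.

```python
import csv
import io
import sqlite3


def load_export(conn, table, csv_file):
    """Load a CSV export (an open file object) into a SQLite table; return the row count."""
    reader = csv.reader(csv_file)
    header = next(reader)
    # Quote identifiers so headers containing spaces still work as column names.
    columns = ", ".join('"' + name.replace('"', '""') + '"' for name in header)
    placeholders = ", ".join("?" for _ in header)
    conn.execute(f'DROP TABLE IF EXISTS "{table}"')
    conn.execute(f'CREATE TABLE "{table}" ({columns})')
    # Skip malformed rows whose field count does not match the header.
    rows = [row for row in reader if len(row) == len(header)]
    conn.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', rows)
    conn.commit()
    return len(rows)


# Hypothetical export contents. With a real download, open the file instead:
#   with open("export.csv", newline="") as f:
#       load_export(conn, "orders", f)
sample = io.StringIO(
    "region,amount\n"
    "North,120.50\n"
    "South,80.00\n"
    "North,30.25\n"
)

conn = sqlite3.connect(":memory:")  # pass a file path to keep the sandbox between sessions
loaded = load_export(conn, "orders", sample)

# The query that answers the question, written by hand for the practice.
totals = conn.execute(
    "SELECT region, SUM(CAST(amount AS REAL)) AS total "
    "FROM orders GROUP BY region ORDER BY total DESC"
).fetchall()
print(loaded, totals)
```

Every value arrives as text, so the query casts `amount` explicitly. Deciding where types get enforced is part of the practice.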

Shadow analysis means building things no one asked for. You have the data. Model something. Build a dataset that answers a question your team hasn’t thought to ask yet. Don’t send it anywhere. Just build it. The practice is the point.

Document the waste. Track, in hours, how much time you spend manually transporting data each week. This isn’t venting. It’s evidence. Quantified inefficiency is the clearest argument for change.
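One lightweight way to keep that record is a plain list of entries that you total by week. The sketch below assumes a hypothetical log of `(date, hours, task)` tuples; the entries are invented for illustration, and a spreadsheet would work just as well.

```python
from collections import defaultdict
from datetime import date


def weekly_hours(entries):
    """Sum logged hours per ISO week, keyed like '2024-W10'."""
    totals = defaultdict(float)
    for day, hours, _task in entries:
        iso = date.fromisoformat(day).isocalendar()
        totals[f"{iso[0]}-W{iso[1]:02d}"] += hours
    return dict(totals)


# Hypothetical log entries: (date, hours, task).
log = [
    ("2024-03-04", 1.5, "export and rename sales report"),
    ("2024-03-05", 1.0, "email weekly attachments"),
    ("2024-03-11", 2.0, "re-export after portal timeout"),
]
print(weekly_hours(log))
```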

Turning Manual Exports into Automated Pipelines

Escaping manual export workflows doesn’t always mean landing somewhere better. More often, it means automating the situation you’re already in.

‘Automation Playbook for Trapped Analysts’ gets into that next. Specific tools and workflows. No direct database access required. Just the CSV in your inbox and a decision to stop moving it by hand.

Happy Sunday! See you next week.

Paid subscribers get the full archive. That includes the vector database implementation guide covering Pinecone, Weaviate, and Chroma, with cost formulas and query optimization patterns; the advanced model drift detection guide with diagnostic workflows and threshold tuning; and the fine-tuning guide with dataset templates and a cost-tracking spreadsheet. The Automation Playbook for Trapped Analysts will be a paid issue. If automating your way out of manual export work is relevant to you, it’s worth being there for it.